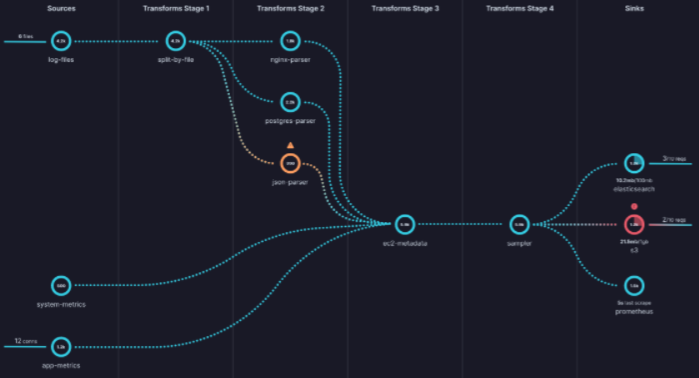

❏ Lets you gather, transform, and route all log and metric data with one simple tool.

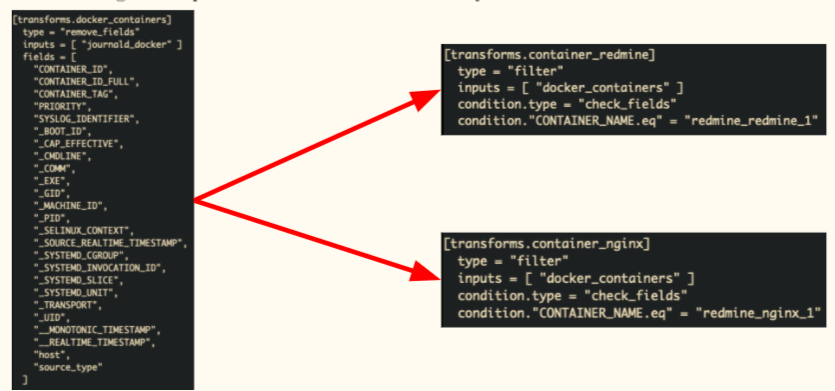

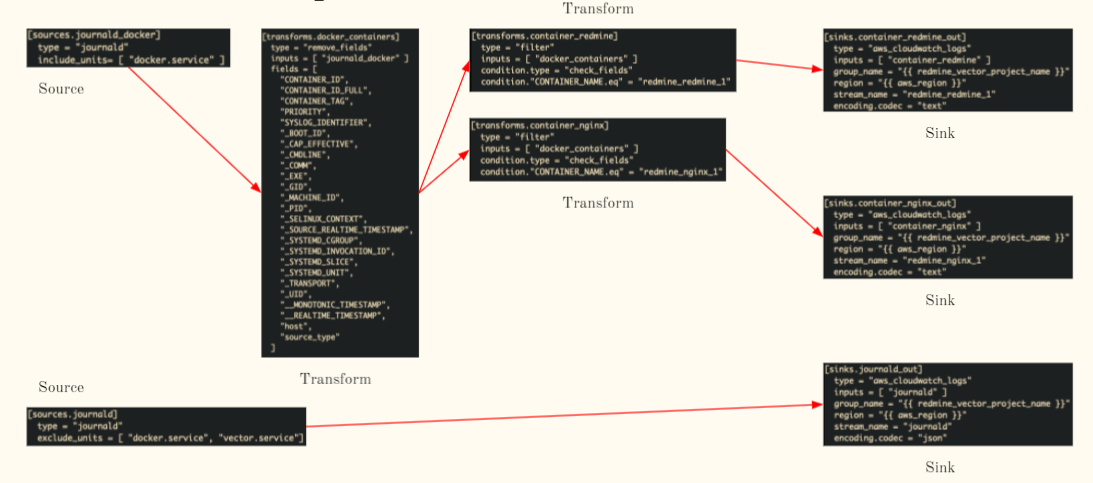

❏ Built from three kinds of components (sources, transforms, sinks) that handle two types of data (logs, metrics).
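
The source, transform, sink model can be sketched in a few lines of Python. This is only a conceptual illustration, not the tool's own API or configuration format: `LogEvent`, `static_source`, `add_level`, `list_sink`, and `run_pipeline` are all hypothetical names invented for this sketch.

```python
from dataclasses import dataclass, field
from typing import Callable, Iterable, Iterator, List


@dataclass
class LogEvent:
    """A single log event: a raw message plus structured fields (hypothetical type)."""
    message: str
    fields: dict = field(default_factory=dict)


def static_source(lines: Iterable[str]) -> Iterator[LogEvent]:
    """Source: produces events, here from an in-memory list of lines."""
    for line in lines:
        yield LogEvent(message=line)


def add_level(events: Iterable[LogEvent]) -> Iterator[LogEvent]:
    """Transform: enriches each event with a 'level' field."""
    for event in events:
        is_error = "error" in event.message.lower()
        event.fields["level"] = "error" if is_error else "info"
        yield event


def list_sink(events: Iterable[LogEvent], out: List[LogEvent]) -> None:
    """Sink: delivers events to a destination, here a plain list."""
    out.extend(events)


def run_pipeline(
    source: Iterable[LogEvent],
    transforms: List[Callable[[Iterable[LogEvent]], Iterable[LogEvent]]],
    sink: Callable[[Iterable[LogEvent]], None],
) -> None:
    """Chain the source through each transform, then hand the stream to the sink."""
    stream = source
    for transform in transforms:
        stream = transform(stream)
    sink(stream)


collected: List[LogEvent] = []
run_pipeline(
    static_source(["service started", "ERROR: disk full"]),
    [add_level],
    lambda events: list_sink(events, collected),
)
```

Each stage only sees the stream handed to it, so sources, transforms, and sinks can be swapped independently.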

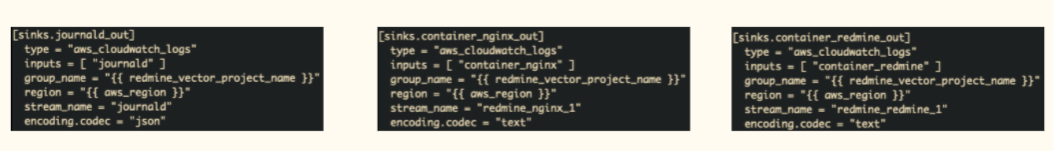

❏ Currently used on Redmine and the Quoin website to collect journal logs, transform them, and send them to Amazon CloudWatch Logs.
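
As a rough illustration of the transform step in that flow, the sketch below reshapes one journal entry into the timestamp-plus-message shape that CloudWatch Logs events use. It assumes the journal logs are systemd journal entries in the JSON format printed by `journalctl -o json`, which the slides do not confirm. The function name `journal_to_cloudwatch_event` and the unit name `redmine.service` are hypothetical, and the real deployment does this inside the tool rather than in Python.

```python
import json


def journal_to_cloudwatch_event(journal_line: str) -> dict:
    """Convert one JSON journal entry into a CloudWatch Logs style event dict."""
    entry = json.loads(journal_line)
    # The journal reports microseconds since the epoch as a string;
    # CloudWatch Logs events use milliseconds since the epoch.
    timestamp_ms = int(entry["__REALTIME_TIMESTAMP"]) // 1000
    unit = entry.get("_SYSTEMD_UNIT", "unknown")
    message = entry.get("MESSAGE", "")
    return {"timestamp": timestamp_ms, "message": f"[{unit}] {message}"}


# Hypothetical sample entry, not taken from a real host.
sample = json.dumps(
    {
        "__REALTIME_TIMESTAMP": "1700000000123456",
        "_SYSTEMD_UNIT": "redmine.service",
        "MESSAGE": "Started Redmine",
    }
)
event = journal_to_cloudwatch_event(sample)
```

Here `event` has the shape of a single entry in the `logEvents` list that the CloudWatch Logs `PutLogEvents` call accepts. Sending it would additionally need a log group, a log stream, and AWS credentials.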